A legacy of face-to-face interviewing in high-quality survey research

This paper considers the importance of face-to-face fieldwork for a number of media currencies that seek to conduct a high-quality survey among the general population, and how we can identify opportunities to support this fieldwork with online data collection.

The label ‘high-quality’ describes several attributes that are agreed to be necessary for these studies. These include the requirement to ensure overall representivity using address-based random probability or quota sampling, and the need to ensure the reliability of the estimates produced. Reliability covers both accuracy and consistency, and often requires the ability to analyse at a highly granular level.

For such studies, a face-to-face fieldwork methodology has generally been seen as the most appropriate. While there is a premium to be paid for face-to-face interviewing, the benefits are deemed worthwhile, in particular the ability of face-to-face interviewers to achieve the best levels of response.

There are other clear benefits:

- A face-to-face interviewer is often best placed to administer the interview, particularly one that includes complex or unfamiliar components and which may require a detailed explanation

- Interviewers can manage participant interest in the research and engagement over the course of a lengthy interview

A general trend of falling response rates, caused by societal changes, is putting these studies under increasing pressure to maintain previous levels of participation, representation and volume of responses.

Declines in response are the result of lower levels of cooperation and an increase in the proportion of the general population with less availability to complete an interview. This has led to interviewers having to work ever harder to encourage people to respond, making more visits or working a higher proportion of unsocial hours.

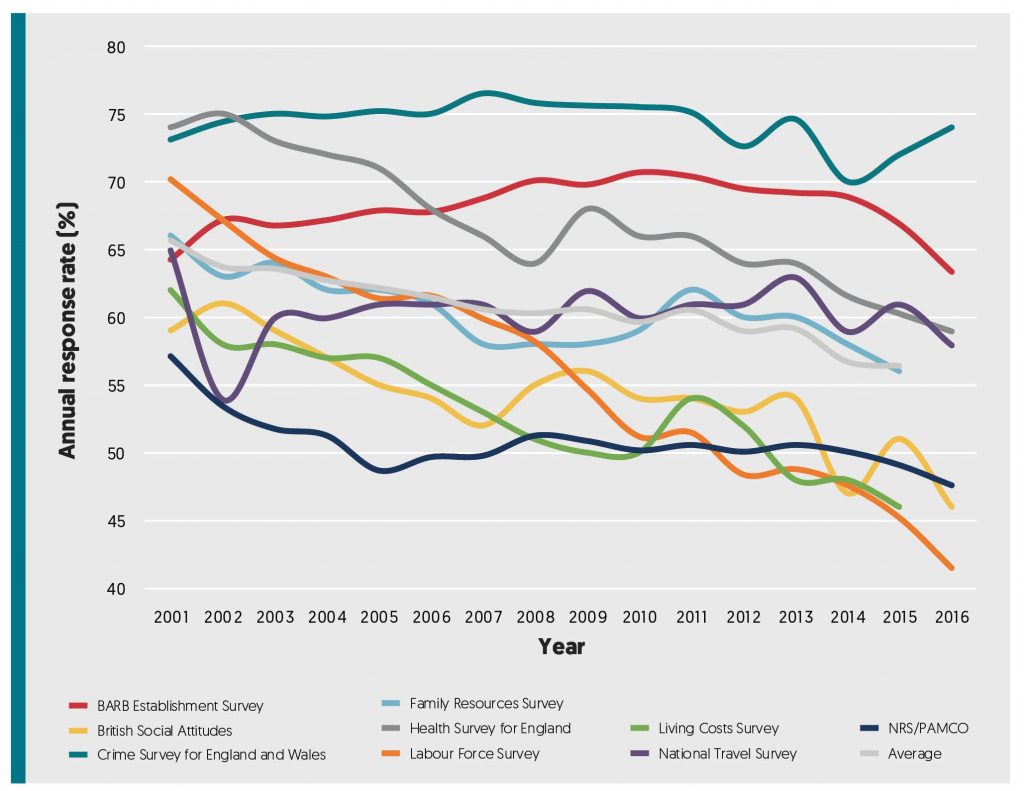

Chart 1 shows the general trend of response rates on face-to-face surveys since 2001 by comparing several long-running studies.

Chart 1: F2F survey response rates by year – 2001+

The decline in average response rates over the past 15 years is clear. It has happened largely despite significant increases in effort and cost, and it raises a key question for us: what can be done to support these studies and the interviewers who work on them?

The option to explore a mixed-mode design

We believe that one option is to carefully consider whether a mixed-mode data collection approach would offer a better overall outcome.

Mixed-mode approaches have been developing for some time and offer an opportunity to support response rates in different ways. Of the alternative modes available, self-administered online data collection is notable in that it can use the same address-based sampling processes currently applied to these studies, allowing a true mixed-mode offer to be made to the same sample of homes.

Indeed, self-administered online surveys have been proposed as a simple replacement for some of those currently completed by face-to-face interviewers. However, an existing study with a complex research design is not easily transferred in this way, and a change of mode is most likely to apply to studies where there is significant flexibility to simplify the research requirements.

Self-administered online data collection is unlikely to be suitable for all types of survey task, and careful assessment of participant requirements is essential to determine where it may best be applied. In addition, any switch from a single-mode to a mixed-mode design would introduce complexities, so a comprehensive programme of testing would need to be undertaken to identify and understand the implications for data processing and reporting. Despite this, we believe there will be many occasions where data can be collected online in a way that satisfies all the requirements for accuracy and consistency, and allows a combined data set to be fully integrated without a loss of quality. The challenge for us, therefore, is to find the best opportunities to use a face-to-face and online mixed-mode methodology in a way that maximises the benefits and minimises any disruptive effects.

Adding an online mode provides two main options, each with different associated outcomes:

‘Online first’: online data collection from address-based samples (as distinct from online access panels) is sometimes referred to as push-to-web. A sampled address is approached using an offline method (usually by post) and the household is asked to complete an online questionnaire. This approach works best where the target population has a high penetration of online users, and it is usually followed up by a secondary mode: either a self-completion paper questionnaire or, in the examples considered here, a face-to-face follow-up.

‘Online second’: the order is reversed, and unproductive outcomes from a face-to-face approach are followed up with a mailed request to complete the survey online. A key factor in applying an Online second approach is identifying the point at which the yield from additional face-to-face visits becomes too low to justify the additional expense incurred. At this point a switch to an online mode may remain viable, and it may also appeal to a different set of people within the residual sample.
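The switch point described above can be framed as a simple marginal-cost rule: stop issuing further face-to-face calls once the cost per interview of the next call exceeds what an online follow-up is expected to cost. The sketch below is illustrative only; `VisitYield`, `switch_point`, the per-visit yields and costs, and the online cost-per-interview threshold are all hypothetical inputs, not figures from any study.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class VisitYield:
    visit: int       # call number (1 = first visit); hypothetical structure
    interviews: int  # interviews achieved at this call
    cost: float      # field cost of making this round of calls


def switch_point(yields: Sequence[VisitYield],
                 online_cost_per_interview: float) -> Optional[int]:
    """Return the first visit whose marginal cost per face-to-face interview
    exceeds the assumed online cost per interview, or None if none does."""
    for v in sorted(yields, key=lambda y: y.visit):
        # A call that yields no interviews has an unbounded cost per interview.
        if v.interviews == 0 or v.cost / v.interviews > online_cost_per_interview:
            return v.visit
    return None


# Illustrative figures only, not taken from any study.
example = [
    VisitYield(1, 300, 6000.0),  # 20 per interview
    VisitYield(2, 150, 4500.0),  # 30 per interview
    VisitYield(3, 60, 3000.0),   # 50 per interview
    VisitYield(4, 20, 2000.0),   # 100 per interview
    VisitYield(5, 5, 1500.0),    # 300 per interview
]
print(switch_point(example, online_cost_per_interview=60.0))  # 4
```

A pure cost rule like this ignores the representivity that late visits can add, so in practice it would be one input to the decision rather than the decision itself.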

Importantly, both approaches would use a single, shared sample, so that the secondary mode would only approach addresses that had not reached a decisive, final outcome at the first stage. The sample remaining at the second stage would therefore generally consist of non-contacts and soft refusals.
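The shared-sample rule can be sketched as a filter over first-stage outcomes: addresses with a final outcome are closed, and only non-contacts and soft refusals are carried forward. This is a minimal sketch assuming a hypothetical set of outcome codes (`interview`, `hard_refusal`, `ineligible`, `non_contact`, `soft_refusal`); real studies use their own, more detailed disposition schemes.

```python
from typing import Dict, List

# Hypothetical disposition codes; real studies use their own schemes.
FINAL_OUTCOMES = {"interview", "hard_refusal", "ineligible"}
RESIDUAL_OUTCOMES = {"non_contact", "soft_refusal"}


def second_stage_sample(first_stage: Dict[str, str]) -> List[str]:
    """Return the address IDs to approach in the secondary mode.

    first_stage maps address ID -> outcome code from the first mode.
    Only addresses without a decisive, final outcome are carried forward.
    """
    residual = []
    for address_id, outcome in first_stage.items():
        if outcome in FINAL_OUTCOMES:
            continue  # decisive outcome: not re-approached in the second mode
        if outcome not in RESIDUAL_OUTCOMES:
            # Fail loudly rather than silently routing an unrecognised code.
            raise ValueError(f"unknown outcome {outcome!r} for {address_id}")
        residual.append(address_id)
    return sorted(residual)


outcomes = {"A1": "interview", "A2": "non_contact", "A3": "hard_refusal",
            "A4": "soft_refusal", "A5": "ineligible"}
print(second_stage_sample(outcomes))  # ['A2', 'A4']
```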

Assessing the potential impact on response

The impact on response will depend on whether we adopt an Online first or an Online second approach. For both, response at the first stage should either be well understood (where face-to-face is used first) or be possible to estimate from existing studies (where online is used first). The follow-up stage is likely to be more difficult to assess before any pilot work is completed, because its response will depend largely on the outcome of the earlier stage.
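As a rough guide to how the two stages combine, response to a sequential design can be written as the first-stage rate plus the follow-up yield on the residual sample. The notation below is ours rather than the paper's, and for simplicity it treats every rate as a share of the total issued sample, ignoring ineligible addresses.

$$
RR_{\text{combined}} = r_1 + p_2\,r_2, \qquad 0 \le p_2 \le 1 - r_1
$$

Here $r_1$ is the first-stage response rate, $p_2$ is the share of the total sample issued to the follow-up stage (smaller than $1 - r_1$ whenever some first-stage outcomes are final, such as hard refusals) and $r_2$ is the response rate among those issued. Applied to the UK ESS web experiment listed under Chart 2, and assuming for illustration that every online non-respondent was issued to the face-to-face stage ($p_2 = 0.75$), the reported figures imply a follow-up response of roughly 17%:

$$
r_2 = \frac{0.38 - 0.25}{0.75} \approx 0.173
$$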

An Online first approach would introduce larger changes to an existing face-to-face study. Response to the online first stage can be estimated from a range of existing studies; however, final response levels would also depend heavily on some key design considerations, such as the materials produced, the use of an incentive, the online experience, the subject matter and the survey requirements.

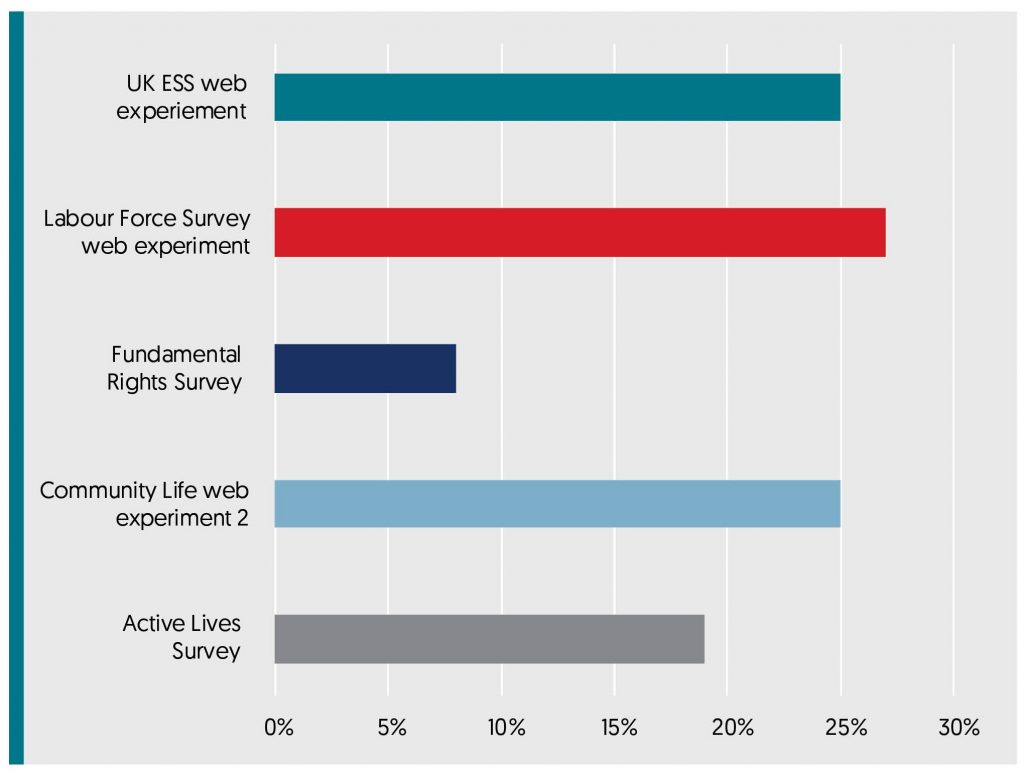

Chart 2: Response achieved from recent online studies using offline (push-to-web) samples

UK ESS web experiment

- Run by NatCen on behalf of City University London

- Fieldwork ran from September 2012 to February 2013

- Random Probability Sample (RPS) from the Royal Mail Postcode Address File (PAF)

- 3 mailings (invitation and 2 reminders)

- Various experiments with incentives

- Average response for Online Only = 25% (Online + F2F = 38%)

Labour Force Survey web experiment

- Run by Ipsos MORI on behalf of ONS

- Fieldwork ran through Q2 and Q3 2017

- RPS from PAF

- 3 mailings (including pre-notification, invitation and reminder)

- Various experiments with incentives

- Response ranged from 19% to 27% depending on the incentive used

Fundamental Rights Survey

- Run by Ipsos MORI on behalf of FRA

- Fieldwork ran through Q3 2017

- RPS from PAF

- 3 mailings (invitation and 2 reminders)

- £5 conditional incentive

- Response of 8%

Community Life web experiment 2

- Run by TNS BMRB on behalf of the Cabinet Office

- Fieldwork ran through 2013/14 annual survey year

- RPS from PAF

- 3 mailings (invitation and 2 reminders)

- £10 conditional incentive

- Response of 25% for the online-only option (online plus post = 28%, with a paper version available on request)

Active Lives Survey

- Run by Ipsos MORI on behalf of Sport England

- Fieldwork started in November 2015

- RPS from PAF

- 4 mailings (invitation and up to 3 reminders)

- £5 conditional incentive

- Address level response of 19% (online plus post – paper version sent with 2nd reminder)

The impact on the subsequent face-to-face activity would also depend on several factors. Stripping out a large proportion of the easier-to-achieve interviews from an engaged group of participants will have an effect, and pre-contacting all homes by letter, possibly with several reminders, may also harden the attitudes of a small group against participation.

There is no doubt that adding an option to complete the survey before any interviewer visits are made will alter the interviewer’s task and, importantly, the productivity we should expect from an interviewer. The critical factor will be whether the output from a two-stage (online first, face-to-face second) approach can materially outperform that achieved from face-to-face only. While we would not expect the face-to-face stage to generate the same number of interviews, by targeting their efforts on a smaller ‘residual’ sample of homes, interviewers may become more efficient at generating responses from among these. This may result in higher aggregate response levels or in a reduction in the number of visits interviewers need to make. These later visits are characterised by very low productivity and a high cost per interview.

Looked at in isolation, introducing an earlier online stage will make the interviewer’s task more difficult, not easier, in terms of both the strike rates achieved and the cost per interview. It will therefore be important that interviewers understand the reasoning behind introducing a preceding stage. What we need from them, how we judge their performance and how they are remunerated will depend in large part on the interviews generated by the online attempt.

In comparison, adopting an Online second approach leaves the face-to-face stage unaffected. The original response rate achieved from face-to-face interviewing will be well understood and, from this, it is possible to calculate the proportion of the total sample likely to be included in the online follow-up. As above, response to the mailed invitation would depend on the specifics of the survey: the quality of the materials, the use of any incentive and the online experience. In this case, however, the characteristics of the remaining sample will also be a key factor.
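The calculation described above can be sketched as follows: the share mailed online is whatever the face-to-face stage left without a final outcome, and the projected combined response adds the assumed online yield on that residual. This is a minimal sketch; `online_second_projection` is a hypothetical helper, every input is expressed as a share of the total issued sample, and the online response rate in particular is an assumption that would need piloting.

```python
from typing import Tuple


def online_second_projection(f2f_interview: float,
                             final_nonresponse: float,
                             online_rr: float) -> Tuple[float, float]:
    """Project an Online second design, as shares of the total issued sample.

    f2f_interview:     share achieving a face-to-face interview
    final_nonresponse: share with another final outcome (e.g. hard refusal)
    online_rr:         assumed response among addresses mailed online
    Returns (share mailed online, projected combined response).
    """
    if not 0 <= f2f_interview + final_nonresponse <= 1:
        raise ValueError("first-stage shares must sum to between 0 and 1")
    if not 0 <= online_rr <= 1:
        raise ValueError("online_rr must be between 0 and 1")
    residual = 1 - f2f_interview - final_nonresponse  # non-contacts, soft refusals
    combined = f2f_interview + residual * online_rr
    return residual, combined


# Illustrative inputs only, not taken from any study.
residual, combined = online_second_projection(0.50, 0.20, 0.10)
print(f"{residual:.0%} mailed online, {combined:.1%} combined response")
# 30% mailed online, 53.0% combined response
```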

Over and above the quantity of response, we should also consider the ability of a follow-up mode to improve overall representivity. By providing more options for completion, a combined data set is likely to include groups that show low or no response in a face-to-face-only design. This may result from a difference in ‘appeal’ (a preference for online over face-to-face), the inability of an interviewer to make contact with the home (due to low availability) or the inability or unwillingness of a participant to complete the interview at the time the interviewer makes contact (not convenient).

As with Online first, comprehensive testing will need to be undertaken to judge the overall benefit to response of adopting any mixed-mode approach.

Challenges associated with an online approach

There are several benefits attributable to the use of an interviewer, each of which needs to be addressed when a survey is transferred online. Alternative mechanisms will need to be agreed for any survey considering the incorporation of online data collection.

Person selection: where there is a requirement to select an individual within a household, a rigorous selection process will need to be established. Detailed selection rules are often confusing when self-administered and may be short-cut by participants who do not understand their purpose. Some loss of representivity may result; this needs to be understood, and a balance struck in the way these processes are designed.
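One rule often considered for self-completion because it is easy to explain is the ‘next birthday’ method: the eligible adult whose birthday comes next completes the survey. It is shown here only as an example of the trade-off described above, not as the method used by any study in this paper; it is quasi-random rather than truly random, and participants can still misapply it. The helper names and the choice to treat a 29 February birthday as 1 March in non-leap years are our own assumptions.

```python
from datetime import date
from typing import List, Tuple


def days_until_birthday(month: int, day: int, ref: date) -> int:
    """Days from ref to the next occurrence of month/day (0 if it is ref)."""
    year = ref.year
    while True:
        try:
            birthday = date(year, month, day)
        except ValueError:
            # 29 February in a non-leap year: assumed to fall on 1 March.
            birthday = date(year, 3, 1)
        if birthday >= ref:
            return (birthday - ref).days
        year += 1  # this year's birthday has passed; try next year


def next_birthday_adult(adults: List[Tuple[str, int, int]], ref: date) -> str:
    """Select the eligible adult whose birthday falls next on or after ref.

    adults: (label, birth_month, birth_day) for each eligible household member.
    Ties (shared birthdays) go to the first adult listed.
    """
    if not adults:
        raise ValueError("household has no eligible adults")
    return min(adults, key=lambda a: days_until_birthday(a[1], a[2], ref))[0]


household = [("A", 12, 25), ("B", 7, 1), ("C", 6, 5)]
print(next_birthday_adult(household, date(2024, 6, 10)))  # B
```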

Quotas: for quota surveys, there will be little overall control over the responses being generated online. Additional controls will need to be built into the face-to-face element, or into the original sampling processes, to balance this out. Again, the likely biases in response will need to be tested so that suitable modifications can be agreed.
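One way to build such a control into the face-to-face element is to recalculate each quota cell’s target after the online returns are in, so interviewers are directed towards the cells the online stage under-filled. This is a minimal sketch under that assumption; `remaining_f2f_targets` and the cell labels and counts are hypothetical. Cells already over-filled online are reported separately, since how to handle that surplus is a design decision the sketch does not make.

```python
from typing import Dict, Tuple


def remaining_f2f_targets(
    targets: Dict[str, int], online_achieved: Dict[str, int]
) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Return (remaining face-to-face target per cell, online surplus per cell)."""
    unknown = set(online_achieved) - set(targets)
    if unknown:
        raise ValueError(f"online returns in unknown cells: {sorted(unknown)}")
    remaining, overfilled = {}, {}
    for cell, target in targets.items():
        got = online_achieved.get(cell, 0)
        remaining[cell] = max(target - got, 0)  # never a negative target
        if got > target:
            overfilled[cell] = got - target
    return remaining, overfilled


# Hypothetical quota cells and counts, for illustration only.
targets = {"men 16-34": 100, "men 35+": 150, "women 16-34": 100, "women 35+": 150}
online = {"men 16-34": 30, "men 35+": 90, "women 16-34": 45, "women 35+": 160}
remaining, overfilled = remaining_f2f_targets(targets, online)
print(remaining)
print(overfilled)
```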

Interviewer effect: complex ideas often do not translate easily into standard questions with limited, stock responses. This is a key area where an interviewer who understands the research objectives can provide as much clarification as needed to help a participant answer questions as intended, ensuring greater consistency of response.

Conversely, interviewers can also introduce bias when asking sensitive questions, which is why such questions are often self-administered even within an interviewer-administered survey.

In addition to the impact we can attribute to interviewers, mode effects will also need to be addressed. Even within a single mode it can be difficult to design questions that are clear and have a single meaning, and we would expect the use of a different mode to produce a greater range of differences, even where questions have been deliberately designed to be as consistent (‘mode neutral’) as possible. Mode effects are therefore likely to lead to different estimates, and combining them will require an assessment of the impact on the final survey estimates.

The time and attention put into the design of the online version of a questionnaire will be key, and cognitive testing should be considered to help understand how interpretation of a question varies and how far the responses it elicits may diverge.

What can we expect from a mixed-mode approach

At present, mixed-mode approaches are still relatively uncommon, precisely because the individual considerations of each study and the range of options available mean that there are few guarantees. There is comparatively little statistical evidence on combined response rates, leaving much for us still to learn. However, this should not prevent us from exploring the opportunities where there is a compelling argument for doing so.

Mixed-mode is becoming more of a consideration at the survey design stage, driven by a desire to support response rates and an acceptance that additional visits by a face-to-face interviewer may no longer be the best option, especially given increasing fieldwork costs. Projecting the fall in response further ahead also suggests that, even where a mixed-mode design is not currently the optimum solution, there is an ever-strengthening argument for planning for the time when this may change.

The main benefits attributable to a mixed-mode approach are:

- Increases in response

- Improved representivity, not just in terms of overall response rate but, crucially, by targeting those people that a face-to-face interviewer may struggle to find

- A reduction in cost, either by preventing the need for extra visits from a face-to-face interviewer, or by removing a substantial group of those that are among the easiest to interview, and so allowing interviewers to more productively direct their efforts at the remaining sample

For any survey considering the adoption of a mixed-mode approach, the key will be the use of a comprehensive programme of testing to assess the degree to which these benefits may be realised at the survey level.

Conclusion

Face-to-face as a method is not going away any time soon; it remains the best way to achieve a high-quality outcome that produces consistent estimates. However, we face a situation where costs are increasing at the same time as response rates are falling.

These changes in the research landscape make this a good time to consider the research design options available to us. Where we continue to require a high-quality, representative sample drawn from a well-maintained frame of addresses, combining face-to-face with online data collection would seem to offer a flexible approach that will benefit a number of surveys, enabling us to maintain survey quality in an efficient and sustainable manner.